Storage (Local, iSCSI and SAN)

In this section I will cover the different types of storage listed below. I will also discuss the Virtual Machine File System (VMFS) and how to manage it.

- Local Storage

- iSCSI

- NFS

HBA Controllers and Local Storage

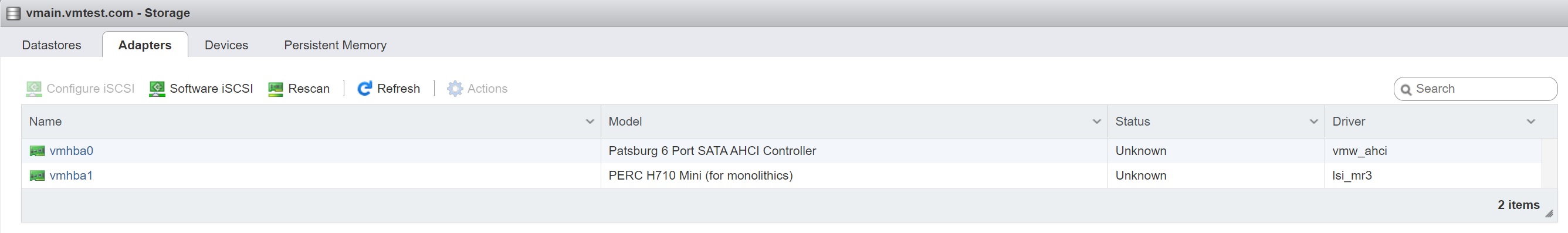

Once the installation has finished, all the storage controllers will be configured. As you can see below, the Dell R620 has two default storage controllers: one has the CD-ROM attached and the other has the disk storage attached. You can also have an iSCSI adapter, which I will walk through setting up against a Synology SAN later.

Next we look at the devices that are attached to the controllers. In the screenshot below I have a CD-ROM and 3 x SSD disks connected. The top drive contains the ESXi operating system; the other two can be used to host virtual machines.

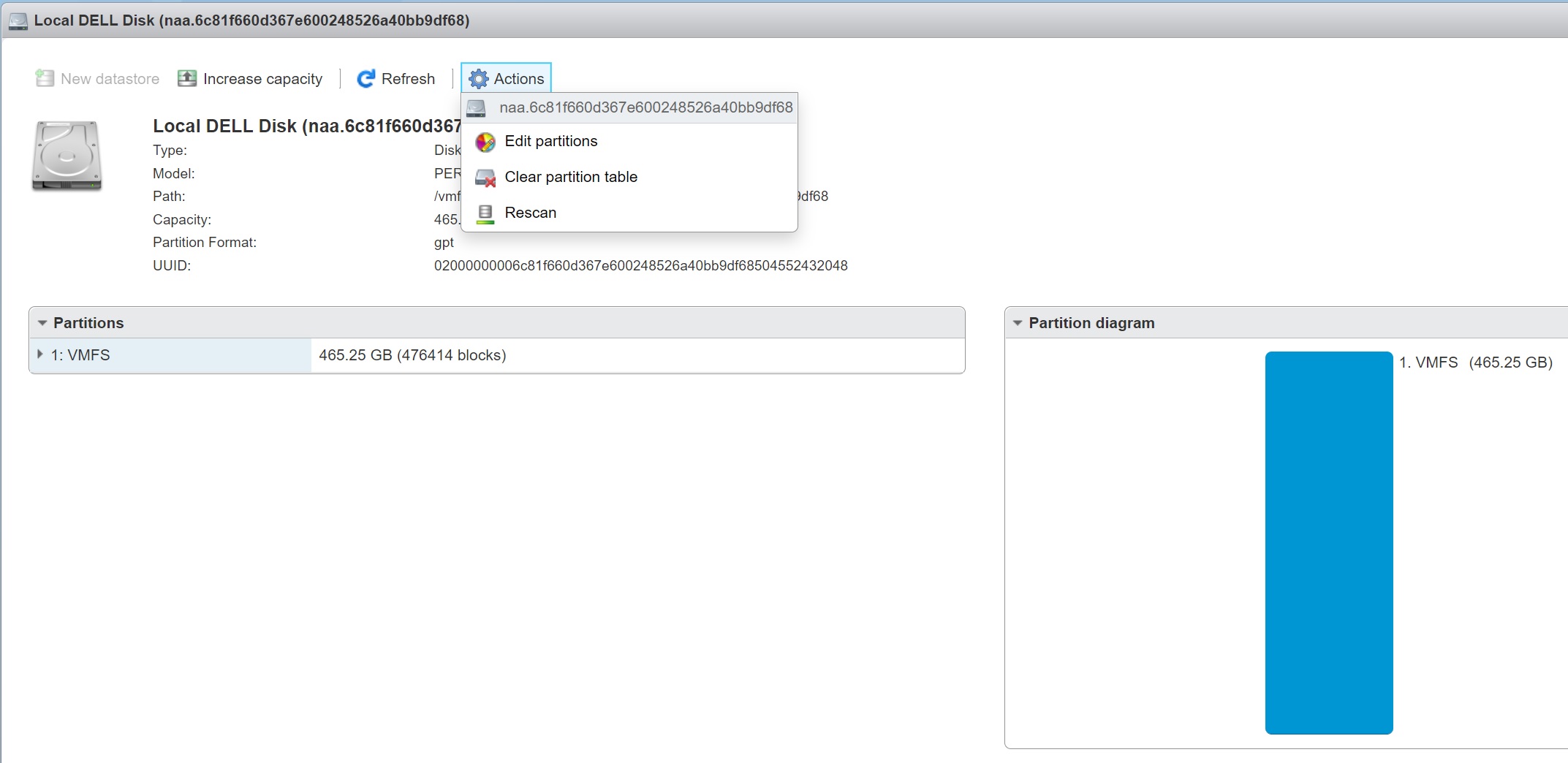

Selecting a device will provide lots of details. You have the option to edit the existing partitions or even to clear the device, making it available for re-partitioning.

Once you have a free device you can create a datastore on it, which makes it available for virtual machines to be created on. As you can see below, I have two datastores.

iSCSI is now the default way to connect to a Storage Area Network (SAN). The network carries the SCSI commands to the SAN, and thus to the disk, over TCP port 3260. Because iSCSI uses the normal network cabling infrastructure, you do not need any additional equipment; however, you should have at least 1Gb, or more commonly now 10Gb, networking. VMware's software iSCSI initiator is based on the Cisco iSCSI initiator, and the entire iSCSI stack resides inside the VMkernel. The initiator (the client) begins the communication with the iSCSI target (the disk array). A good NIC will have what is called a TCP Offload Engine (TOE), which improves performance by taking that load off the main CPU.
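Since everything rides on TCP port 3260, a quick reachability check from any machine on the storage network can rule out basic network problems before you touch the ESXi configuration. The following is a minimal sketch, not part of any VMware tooling; the function name and timeout are my own choices.

```python
import socket

ISCSI_PORT = 3260  # default iSCSI target port, as mentioned above


def iscsi_port_open(host: str, port: int = ISCSI_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    This only proves that something is listening on the port; it does not
    perform an iSCSI login or check any LUN permissions.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

You would call it as `iscsi_port_open("<SAN IP address>")`, substituting the address of your own SAN.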

There are a number of limitations with iSCSI that you should be aware of:

- You can install ESX to an iSCSI LUN for iSCSI booting purposes, but you must use a supported hardware initiator

- Only the hardware initiator supports static discovery of LUNs from the iSCSI system. Dynamic discovery, however, is much easier to set up and works with both software and hardware initiators

- There is no support for running clustering software within a virtual machine using iSCSI storage

iSCSI supports authentication using the Challenge Handshake Authentication Protocol (CHAP) for additional security, and iSCSI traffic should be on its own LAN for performance reasons.
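To give a feel for what CHAP does, here is a small sketch of the challenge/response calculation as defined in RFC 1994 (MD5 over the identifier, the shared secret and the challenge). It only illustrates the idea; it is not how ESXi or Synology implement it internally, and the secret shown is a made-up placeholder.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP response per RFC 1994: MD5(identifier || secret || challenge)."""
    if not 0 <= identifier <= 255:
        raise ValueError("identifier must fit in one octet")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# The authenticator sends an identifier and a random challenge.
identifier = 1
challenge = os.urandom(16)

# The peer answers using the shared secret (hypothetical value).
secret = b"example-chap-secret"
response = chap_response(identifier, secret, challenge)

print(verify(identifier, secret, challenge, response))          # True
print(verify(identifier, b"wrong-secret", challenge, response))  # False
```

The point to take away is that the secret itself never crosses the network, only a hash that depends on a one-time challenge.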

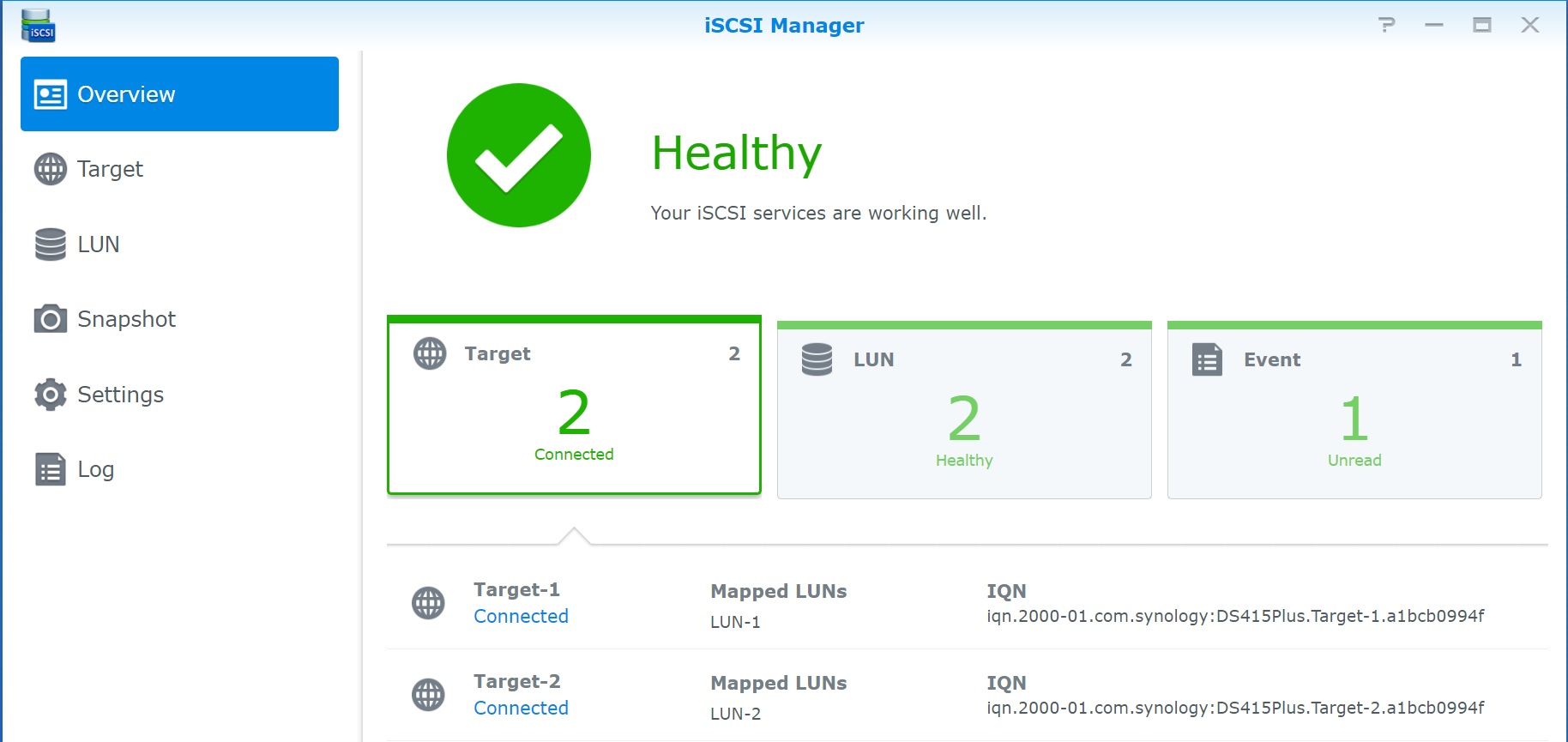

I have set up a couple of LUNs on my Synology SAN and configured the targets to allow the ESXi server to connect. You can use mapping to secure the LUNs, making sure only specific ESXi servers can access specific LUNs.

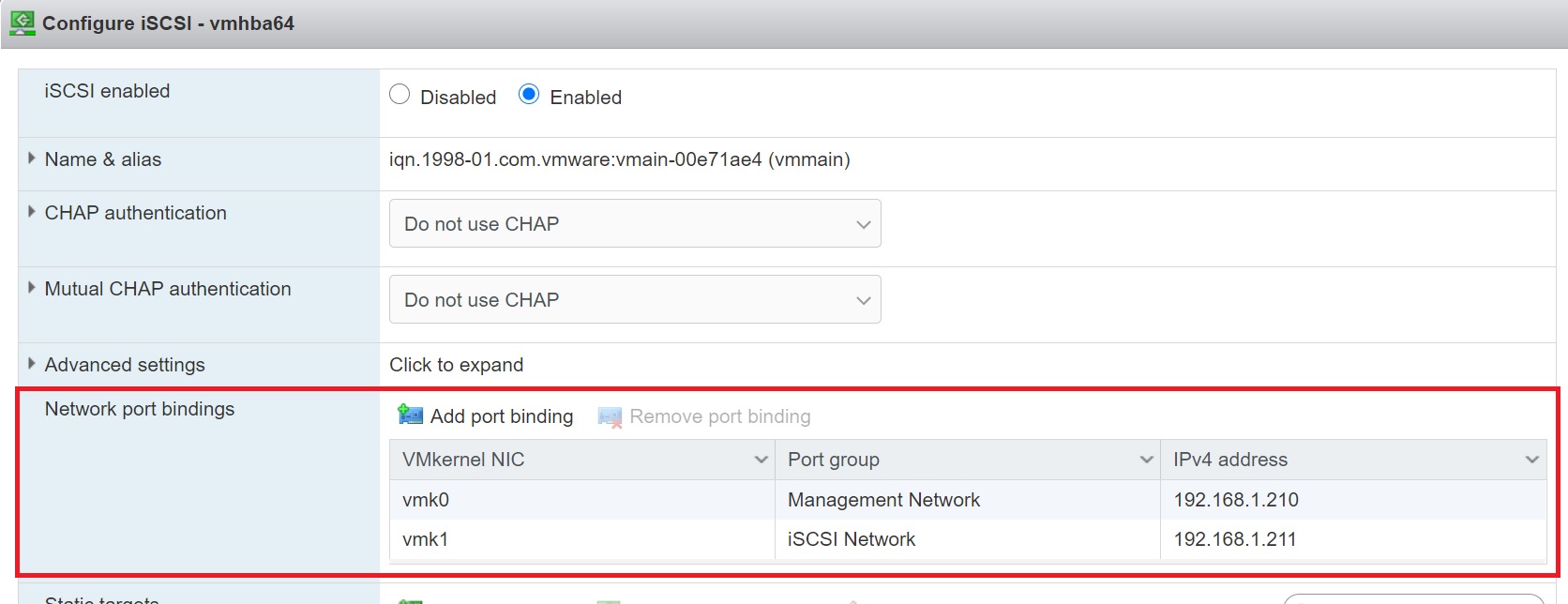

iSCSI has its own naming convention: it uses the iSCSI Qualified Name (IQN), which is a bit like a DNS name, or rather a reversed DNS name. The image below shows the current iSCSI setup in my test environment, which is configured through the Software iSCSI icon. I have bound iSCSI to a specific port and then added the IP address of the dynamic target. Once you save the configuration and open it again, it should pick up any LUNs that are available; below you can see that two LUNs were attached.

The IQN can be broken down as below:

| IQN | My IQN iqn.1998-01.com.vmware:localhost-7439ca68 breaks down as: iqn is always the first part. Basically, the IQN is used to ensure uniqueness. |
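The general IQN layout from the iSCSI specification is `iqn.` followed by a year-month date, the reversed domain name of the naming authority, and an optional unique string after a colon. A minimal sketch of splitting an IQN into those parts follows; the class and function names are my own, and the pattern is deliberately loose rather than a full validator.

```python
import re
from typing import NamedTuple


class IQN(NamedTuple):
    date: str       # yyyy-mm associated with the naming authority's domain
    authority: str  # reversed domain name, e.g. com.vmware
    unique: str     # optional string chosen by the naming authority


# iqn.<yyyy-mm>.<reversed domain>[:<unique string>]
_IQN_RE = re.compile(r"^iqn\.(\d{4}-\d{2})\.([^:]+)(?::(.+))?$")


def parse_iqn(name: str) -> IQN:
    """Split an IQN into its date, naming authority and unique string."""
    match = _IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not a valid IQN: {name!r}")
    date, authority, unique = match.groups()
    return IQN(date, authority, unique or "")


print(parse_iqn("iqn.1998-01.com.vmware:localhost-7439ca68"))
```

For my IQN this gives the date `1998-01`, the naming authority `com.vmware` and the unique string `localhost-7439ca68`.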

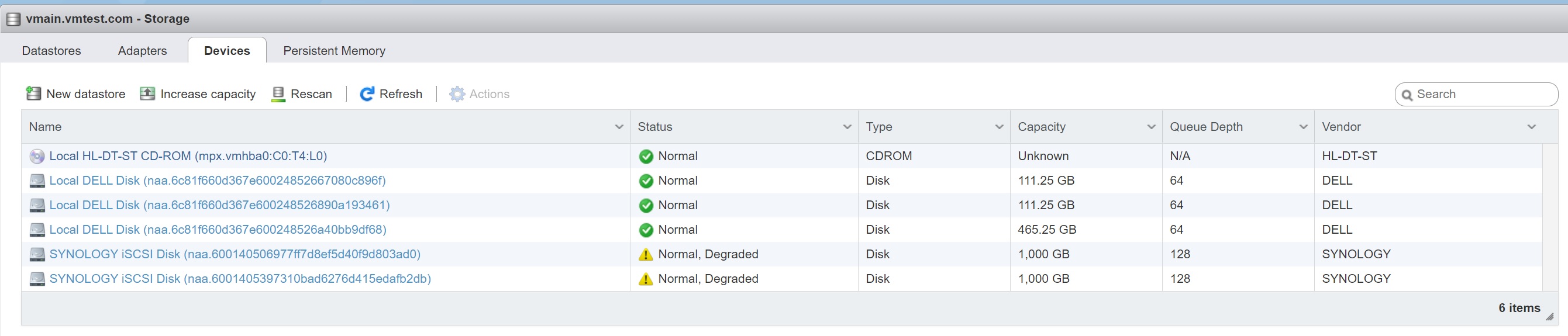

Once iSCSI has been set up you should be able to see the disk devices in the devices screen. As you can see below, the two LUNs (devices) are available. You might notice that their status is "Normal, Degraded": in my case they have only a single network path, and should that path go down I will lose the connection to these devices.

iSCSI is very easy to set up on VMware. The main problems normally reside on the iSCSI server/SAN side, in granting permission for the ESXi server to see the LUNs (this is known as LUN masking). I will point out again that you should really use a dedicated network for your iSCSI traffic, as this will improve performance greatly.
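Conceptually, LUN masking is just a lookup from each LUN to the initiators that are allowed to see it. The sketch below models that idea in a few lines; the LUN names and the second IQN are hypothetical, and a real SAN keeps this table in its own configuration rather than in code.

```python
from typing import Dict, List, Set

# Hypothetical masking table: LUN name -> initiator IQNs allowed to see it.
LUN_MASK: Dict[str, Set[str]] = {
    "LUN-1": {"iqn.1998-01.com.vmware:localhost-7439ca68"},
    "LUN-2": {"iqn.1998-01.com.vmware:localhost-7439ca68"},
    "LUN-3": {"iqn.1998-01.com.vmware:some-other-host"},  # made-up initiator
}


def visible_luns(initiator_iqn: str, mask: Dict[str, Set[str]] = LUN_MASK) -> List[str]:
    """Return the LUNs that the given initiator is permitted to discover."""
    return sorted(lun for lun, allowed in mask.items() if initiator_iqn in allowed)


print(visible_luns("iqn.1998-01.com.vmware:localhost-7439ca68"))
print(visible_luns("iqn.1998-01.com.vmware:unknown-host"))
```

If an ESXi server cannot see a LUN you expect, checking that its IQN appears in the SAN's equivalent of this table is usually the first thing to look at.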

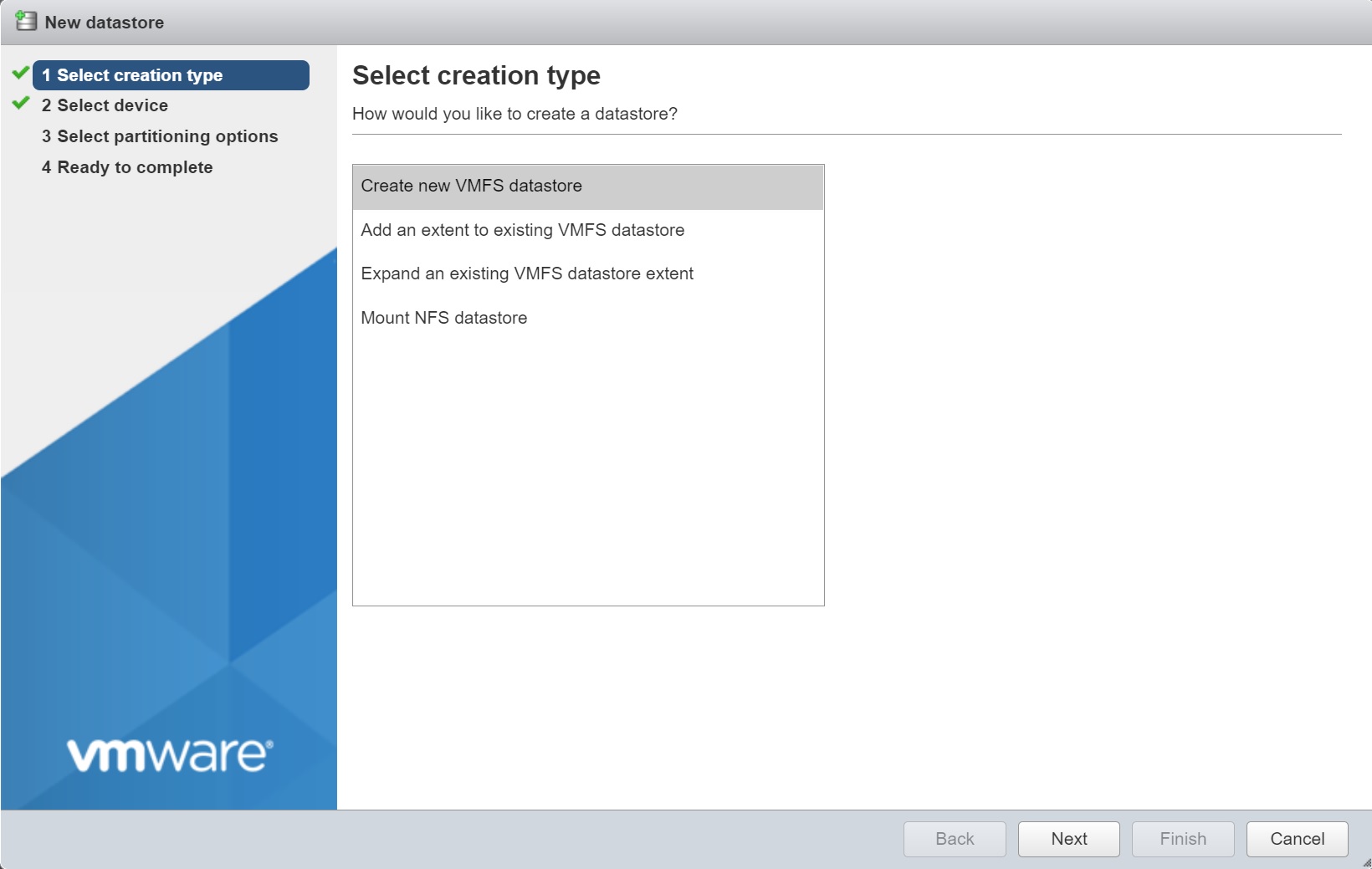

Next we can create datastores on the devices, which makes them available to host VMs. You can create datastores from either the devices screen or the datastores screen; in my case I am creating a new datastore.

Enter the datastore name and select the device to be used.

Then select the partitioning type; here I recommend the latest version.

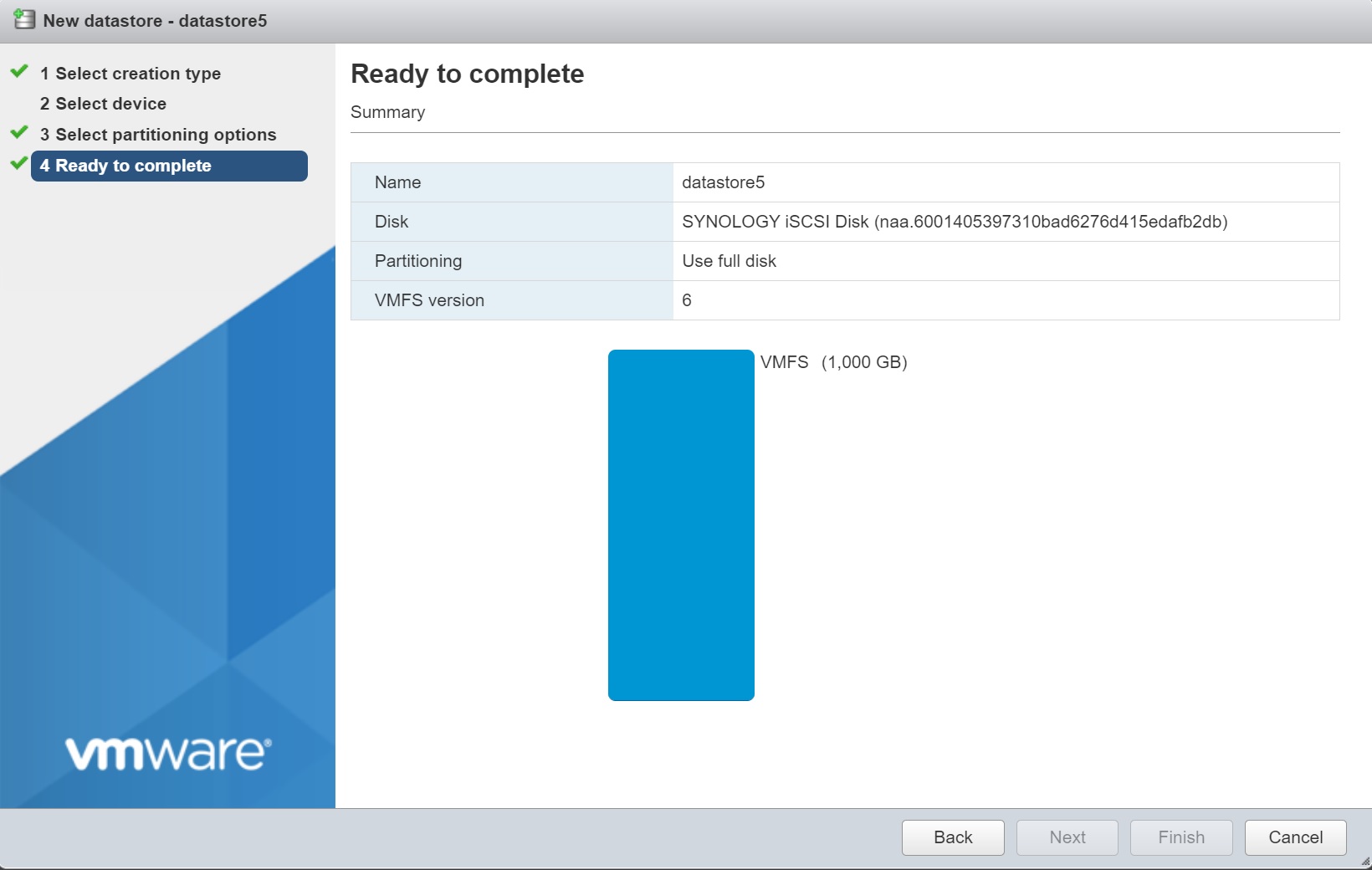

Finally you get a summary screen; select Finish to create the datastore.

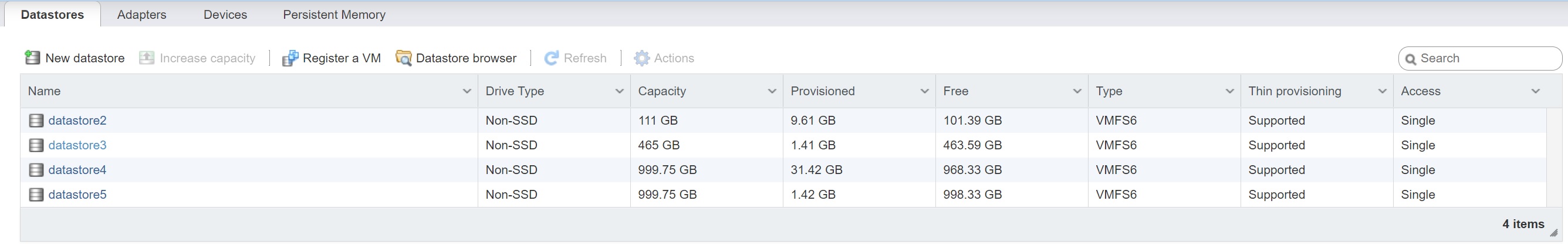

Once the process completes, the datastore will be available to host VMs. As you can see below, I have created two datastores with the two LUNs from the Synology SAN.

In the above setup the Synology devices were shown as normal and degraded because only one path existed to the Synology SAN. Setting up multipathing to the SAN is simply a matter of creating another VMkernel adapter with a different IP address and assigning it to a different port group, or even a different vSwitch. Once it is set up you bind it to iSCSI, which gives you two different paths to the SAN; if one has any issues, the other will take over.

Now when you view the devices, the two Synology devices are in a normal state.
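The relationship between the number of working paths and the status shown for a device can be summed up in a tiny model. This is only an illustration of the behaviour described above, not how ESXi computes it; the adapter names and the label for the no-path case are my own assumptions.

```python
from typing import Dict


def device_status(paths: Dict[str, bool]) -> str:
    """Map path health (VMkernel adapter name -> path is up) to a status label."""
    up = sum(1 for ok in paths.values() if ok)
    if up >= 2:
        return "Normal"
    if up == 1:
        return "Normal, Degraded"  # still working, but no redundancy left
    return "No usable path"  # hypothetical label for this sketch


single = {"vmk1": True}
dual = {"vmk1": True, "vmk2": True}

print(device_status(single))  # Normal, Degraded
print(device_status(dual))    # Normal

dual["vmk1"] = False          # simulate one path failing
print(device_status(dual))    # Normal, Degraded
```

With two bound adapters, losing one path drops the device back to the degraded state rather than losing the connection altogether.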

NFS seems to be the default choice when it comes to setting up storage. One reason is that it is very easy to set up and to expand. Also, with Fibre Channel or iSCSI you will be using VMFS file systems, whereas with NFS you use the NFS file system. Below are some reasons why you might want to use NFS over iSCSI/Fibre Channel:

- Easy Setup

- Easy to expand

- UNMAP is an advantage on iSCSI

- VMFS is quite fragile if you use thin-provisioned VMDKs. A single power failure can render a VMFS volume unrecoverable.

- NFS datastores immediately show the benefits of storage efficiency (deduplication, compression, thin provisioning) from both the NetApp and vSphere perspectives

- NetApp specific: the NetApp NFS Plug-In for VMware is a plug-in for ESXi hosts that allows them to use VAAI features with NFS datastores on ONTAP

- NetApp specific: NFS has autogrow

- When using NFS datastores, space is reclaimed immediately when a VM is deleted

- Performance is almost identical

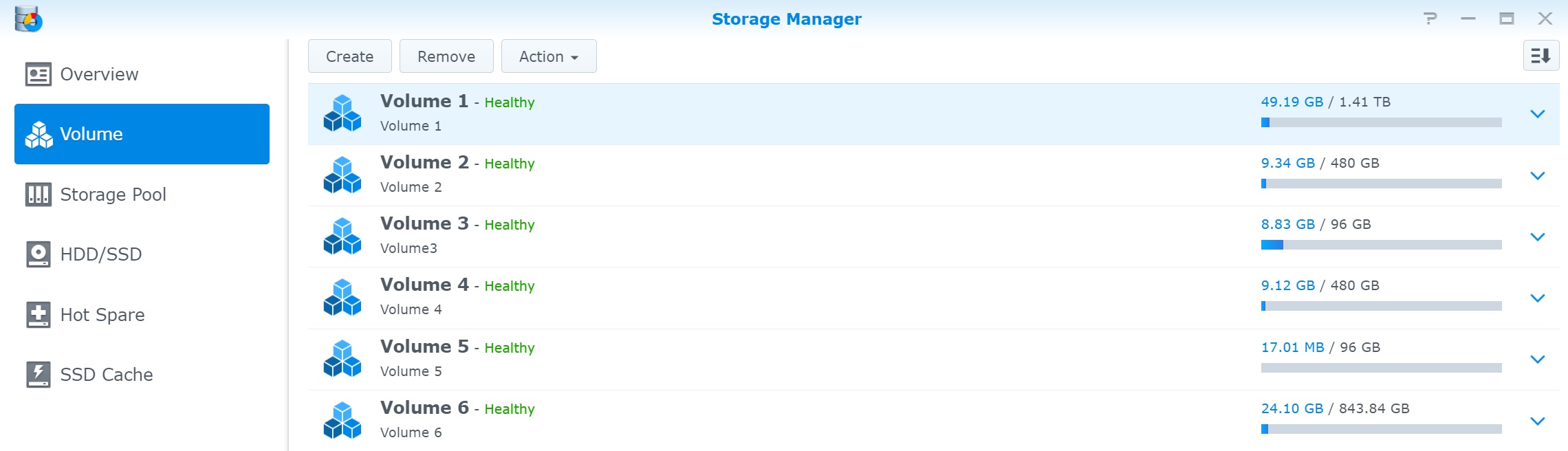

I am using a synology SAN, so I setup a volume, nothing special here

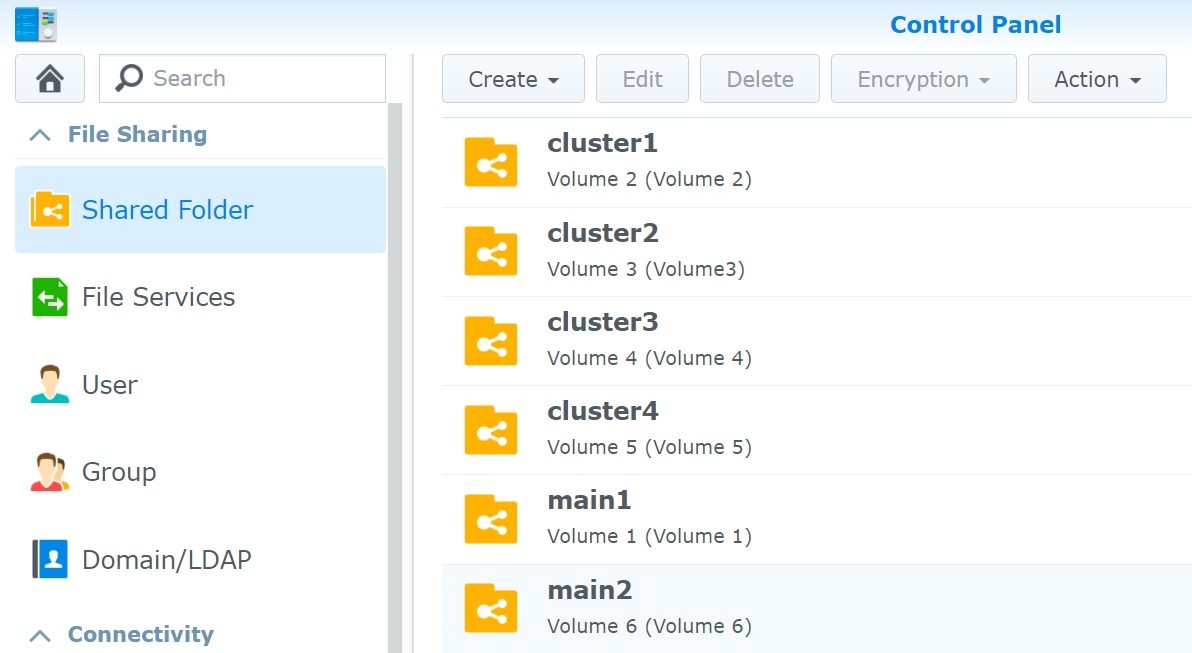

Next I create a shared NFS folder, using one of the above volumes

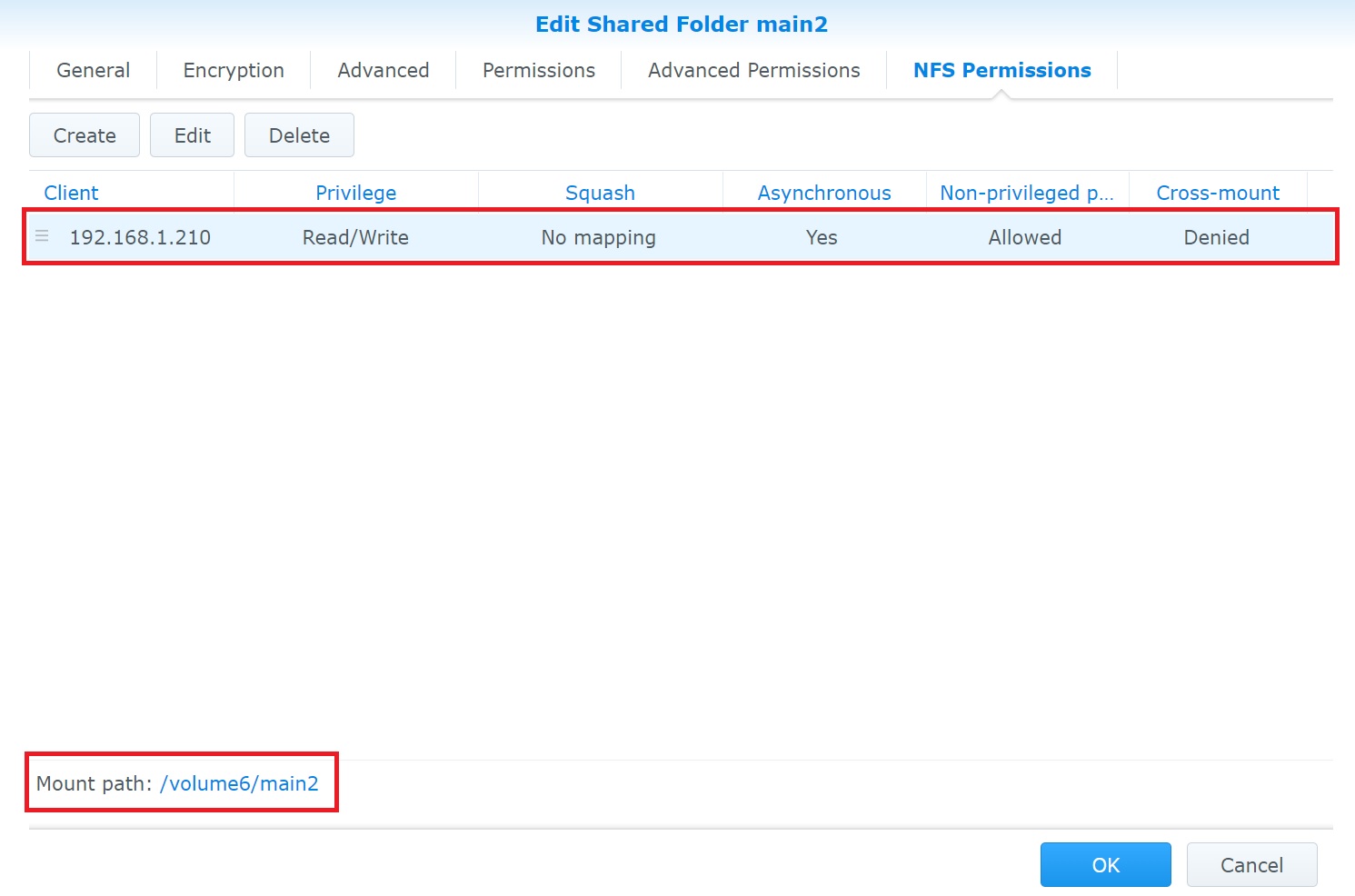

Next we can lock down access to that volume using NFS permissions. As you can see below, I have only granted access to one ESXi server. Also note the mount path at the bottom; you will need this when you create the datastore.

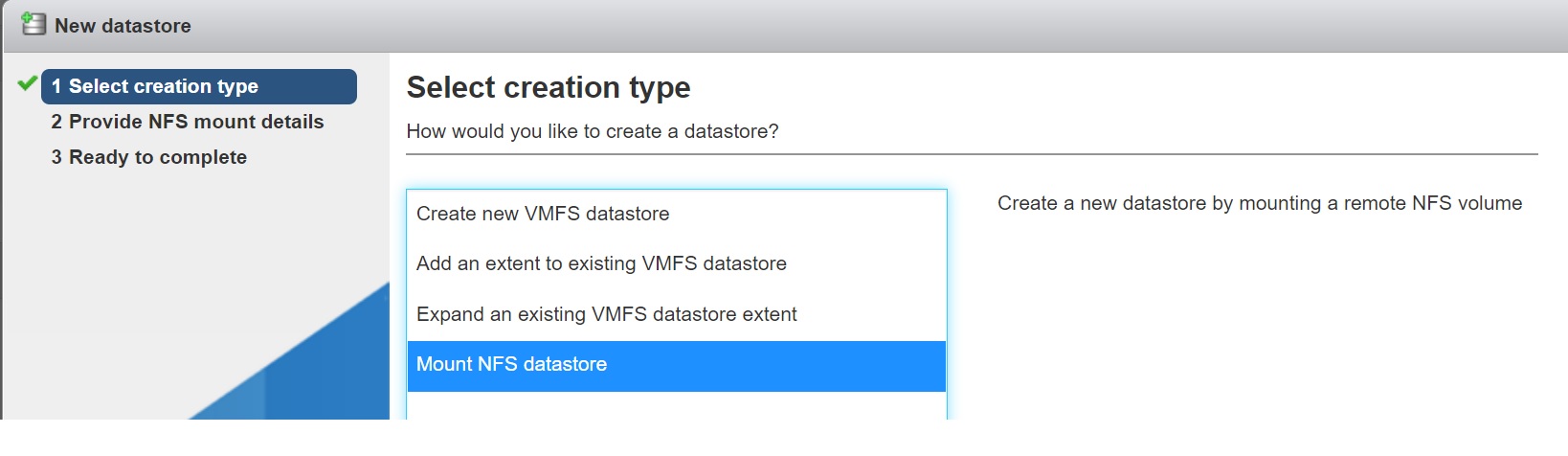

When creating a datastore we select the option to Mount NFS datastore

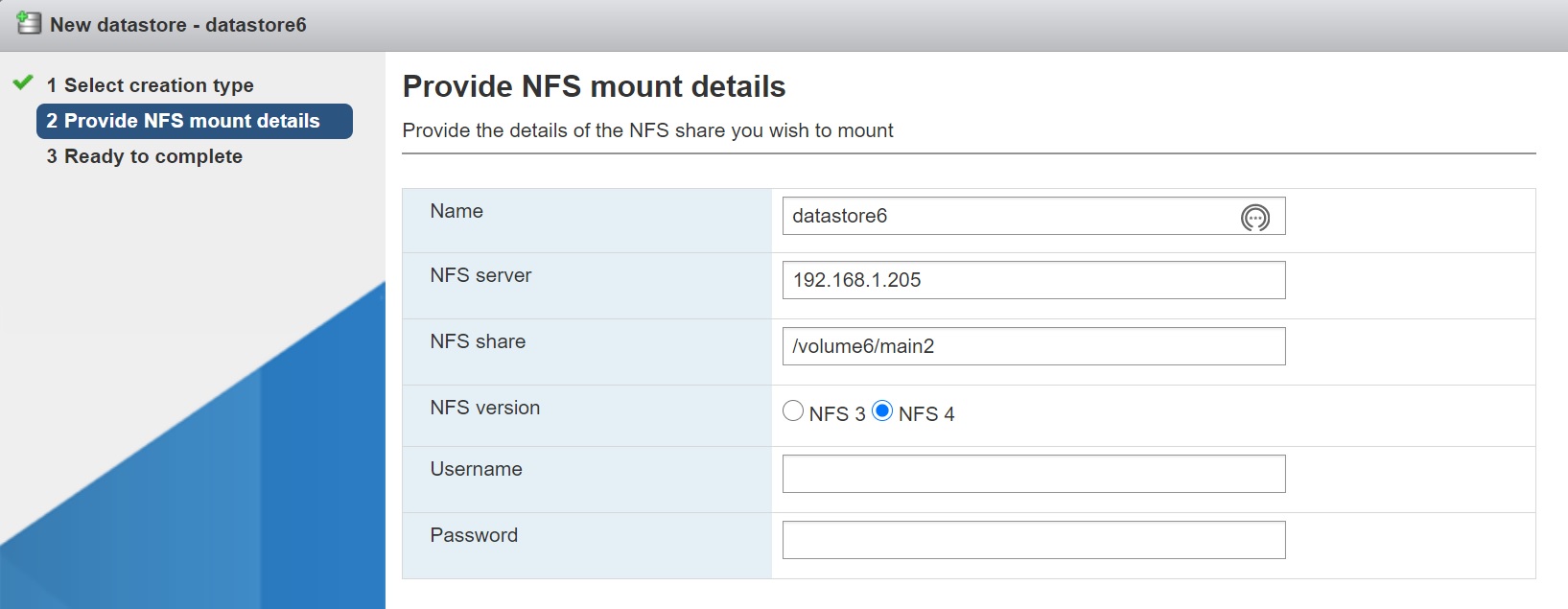

Next we supply the details of the Synology SAN and the mount path. I select NFS version 4, which has many extra features compared with version 3 (extra security, performance improvements, multipathing, etc).
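The three things the mount dialog needs are a datastore name, the NFS server and the mount path noted earlier, plus the NFS version. A small sketch that gathers and sanity-checks those inputs is shown below; the names, the address and the path are placeholders, and the checks are my own rather than anything ESXi enforces in this form.

```python
from typing import NamedTuple

SUPPORTED_VERSIONS = ("NFS 3", "NFS 4")  # the two choices discussed above


class NfsMount(NamedTuple):
    name: str     # datastore name as it will appear in ESXi
    server: str   # hostname or IP address of the NFS server
    share: str    # mount path exported by the server
    version: str  # one of SUPPORTED_VERSIONS


def make_nfs_mount(name: str, server: str, share: str, version: str = "NFS 4") -> NfsMount:
    """Validate the mount details and return them as one record."""
    if not name.strip():
        raise ValueError("datastore name must not be empty")
    if not server.strip():
        raise ValueError("NFS server must not be empty")
    if not share.startswith("/"):
        raise ValueError("mount path must be absolute, e.g. /volume1/share")
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"version must be one of {SUPPORTED_VERSIONS}")
    return NfsMount(name.strip(), server.strip(), share, version)


# Placeholder values; use the mount path shown on your own SAN.
print(make_nfs_mount("nfs-datastore-1", "192.0.2.20", "/volume1/esxi-nfs"))
```

The most common mistake is a mount path that does not exactly match what the SAN exports, which is why it is worth copying it from the permissions screen.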

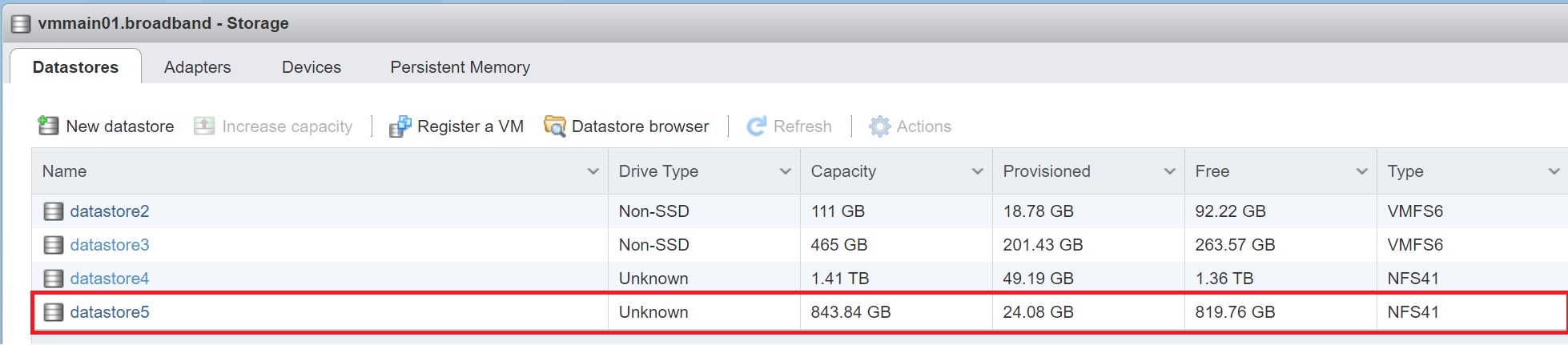

That's it, you have a mounted datastore that you can now use for your VMs.

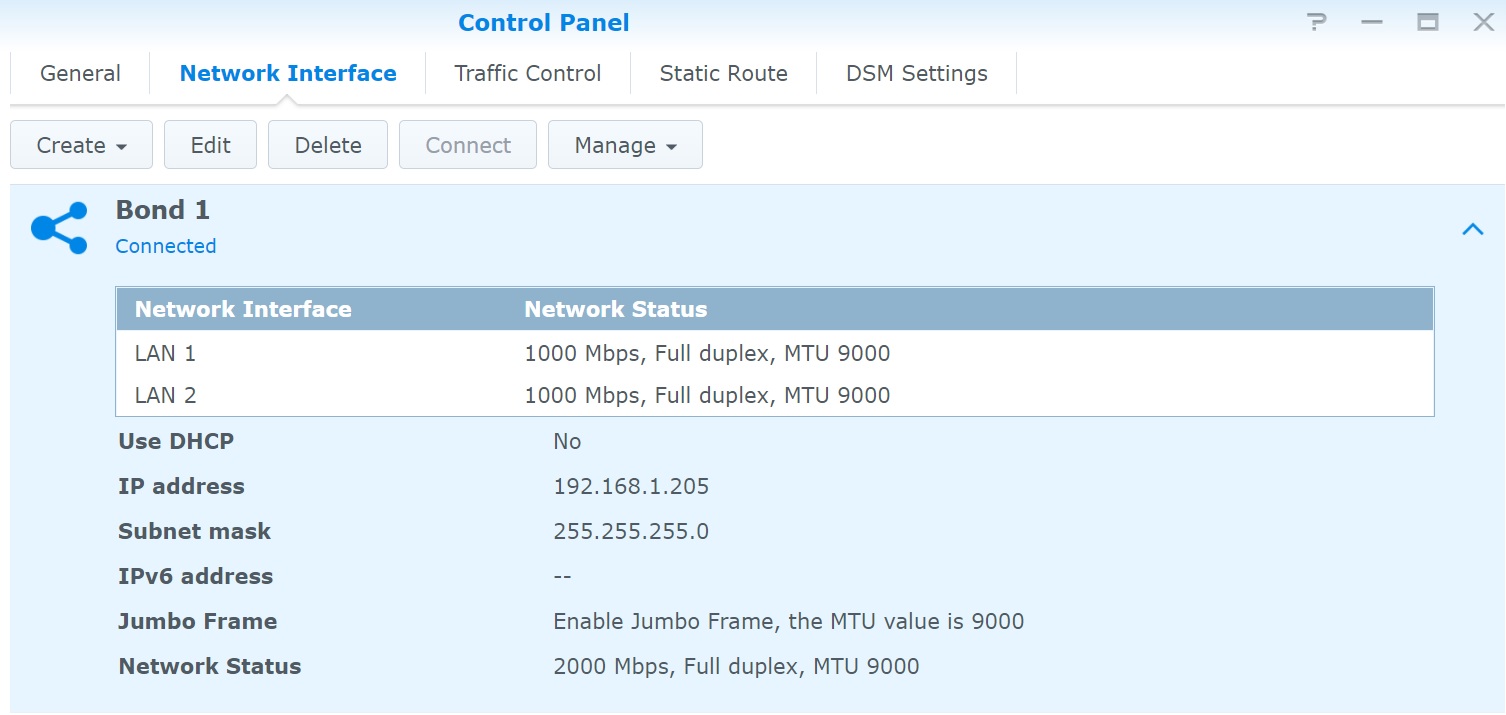

Regarding multipathing, the Synology SAN already provides this: as you can see, I have a bonded interface using two NICs on the Synology SAN itself. You could also create a dedicated switch (also using two NICs) with MTU 9000 (jumbo frames) to increase performance. You can also use the esxcli storage command to add additional IP addresses for the same SAN, but this depends on how you have set up your SAN.
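To see roughly why MTU 9000 helps, you can compare how much of each frame on the wire is actual payload. The sketch below assumes plain IPv4 and TCP headers without options and no VLAN tag, so the numbers are approximate; the larger benefit in practice is often fewer packets for the CPU and NIC to process.

```python
# Per-frame Ethernet overhead in bytes:
# 14 header + 4 frame check sequence + 8 preamble/SFD + 12 inter-frame gap.
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12

# IPv4 header + TCP header, both without options (an assumption).
IP_TCP_HEADERS = 20 + 20


def payload_efficiency(mtu: int) -> float:
    """Fraction of bytes on the wire that carry TCP payload for a full frame."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload")
# MTU 1500: 94.9% payload
# MTU 9000: 99.1% payload
```

Remember that jumbo frames only work if every device in the path (vSwitch, VMkernel adapter, physical switch and SAN) is set to the same MTU.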