Replication and SRM pre-setup

In this section I describe the test environment I will use to experiment with VMware vSphere Replication and Site Recovery Manager (SRM). Two other sections detail how to set up Replication and SRM, and they must be installed in that order. This section also touches on setting up a cluster and the HA components, and I will add a more detailed section on HA in the future.

I have a Dell R620 server with 2 x Intel Xeon E5-2650 CPUs and 120GB of RAM. I will be using nested ESXi servers, with a Synology SAN providing the shared storage.

I first tried iSCSI but encountered the error below when setting up the Replication server and trying to start the VRM service. This may have been caused by the old Synology SAN or by using nested ESXi servers, so I switched to NFS. Comparing speed and performance, I was surprised to find no difference between the two, and NFS seems to be a lot easier to set up than iSCSI.

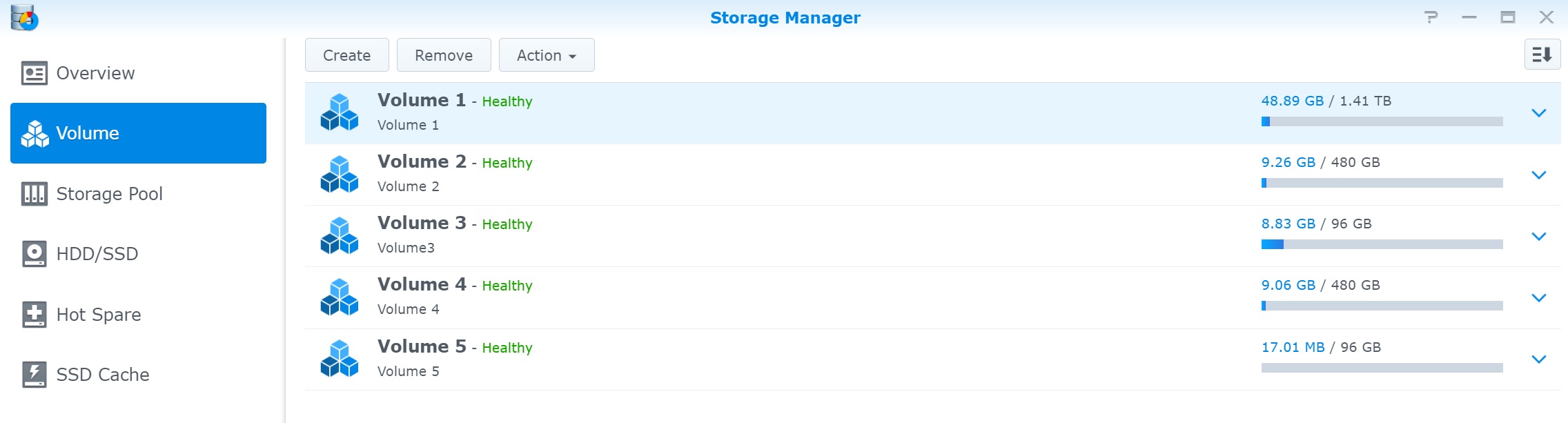

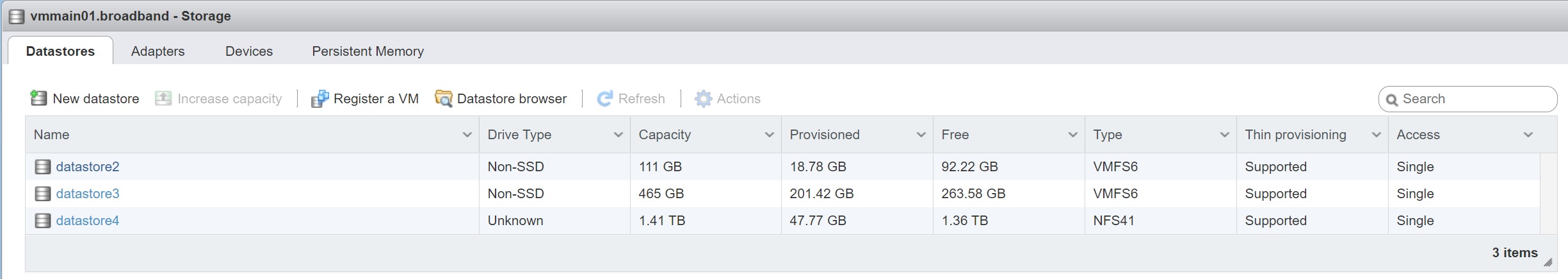

I created 5 volumes: the first is used by the physical ESXi server and the others by the nested ESXi servers/vCenters. The 1.41TB volume is used by the physical ESXi server to hold the vCenter servers, the 480GB volume is used for the Replication and Site Recovery Manager servers and any test VMs, and the 96GB volumes are used as heartbeat datastores for cluster HA.

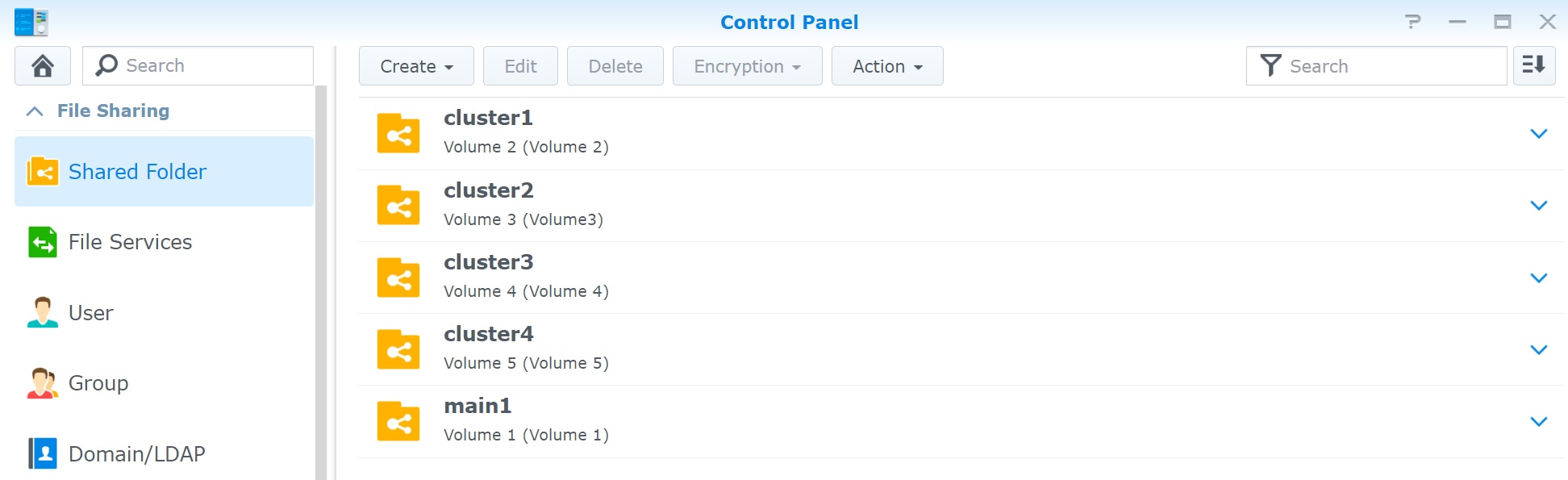

Next I create NFS shared folders on the Synology SAN that will be mounted by the various ESXi servers in my setup.

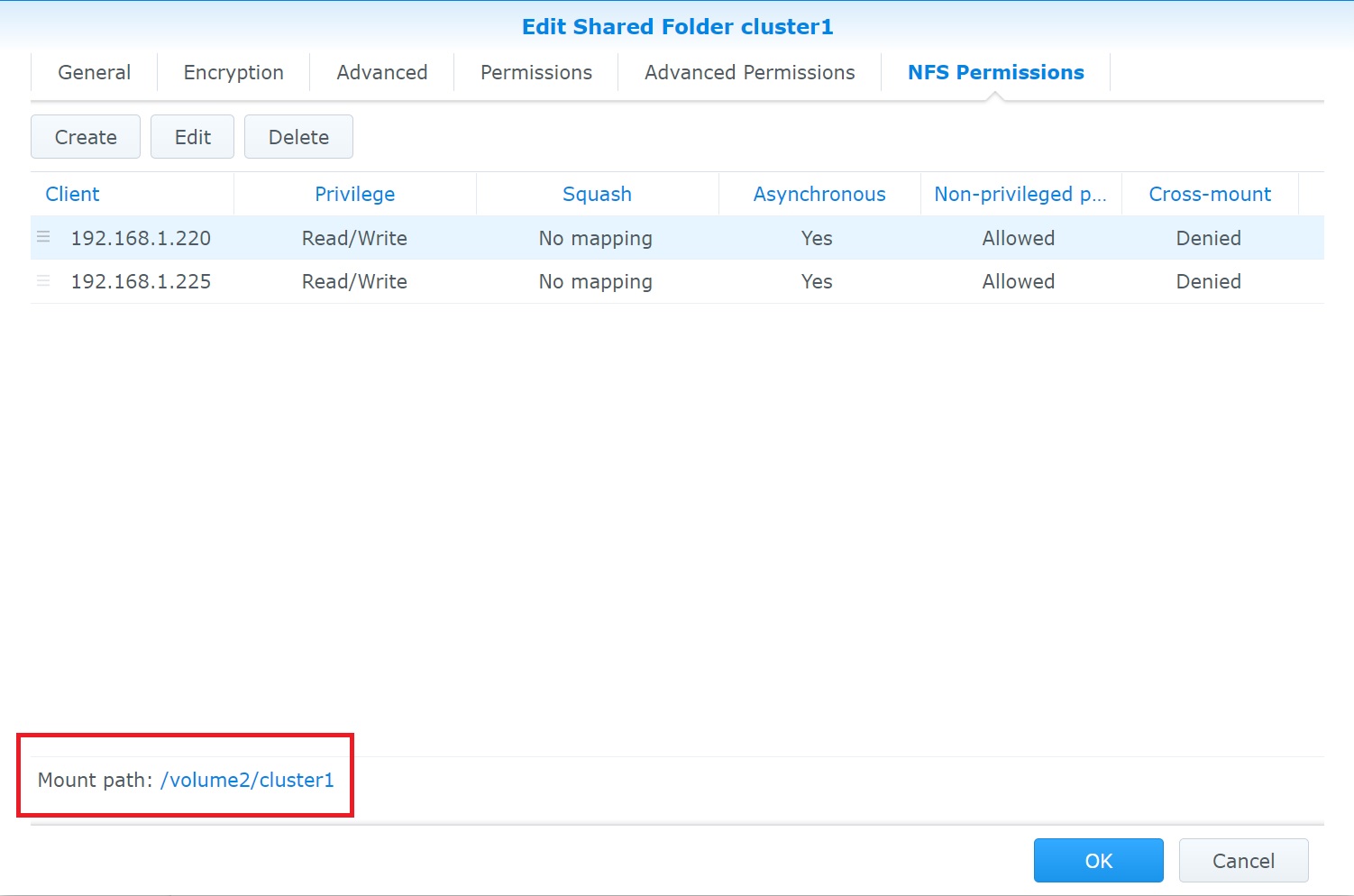

On the Synology SAN I can use NFS permissions to mask the folders so that only the servers that need access can mount them. Also make a note of the mount path, as it is needed when creating the datastores.
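As a rough illustration of how these NFS permission rules mask the folders, the Python sketch below models each share as a list of allowed client hosts or subnets and checks whether a given ESXi server could mount it. The share paths and IP addresses are hypothetical placeholders, not my actual configuration. The Synology enforces the real rules itself, so this is only a way of reasoning about (or planning) the rule set.

```python
import ipaddress

# Hypothetical NFS permission rules: share mount path -> allowed clients.
# Each entry may be a single host ("192.168.1.10") or a subnet ("192.168.1.20/30").
NFS_RULES = {
    "/volume1/vcenters": ["192.168.1.10"],      # physical ESXi host only
    "/volume1/site1-vms": ["192.168.1.20/30"],  # nested hosts esxi1/esxi2 (.21, .22)
}


def can_mount(share: str, client_ip: str) -> bool:
    """Return True if client_ip is allowed by any rule for the share."""
    client = ipaddress.ip_address(client_ip)
    for rule in NFS_RULES.get(share, []):
        # A bare address becomes a /32; strict=False also tolerates host bits in a CIDR rule.
        if client in ipaddress.ip_network(rule, strict=False):
            return True
    return False


print(can_mount("/volume1/vcenters", "192.168.1.10"))   # True
print(can_mount("/volume1/vcenters", "192.168.1.21"))   # False
print(can_mount("/volume1/site1-vms", "192.168.1.22"))  # True
```

Allowing a /30 subnet rather than listing each host keeps the rule short, at the cost of also admitting the unused addresses in that range.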

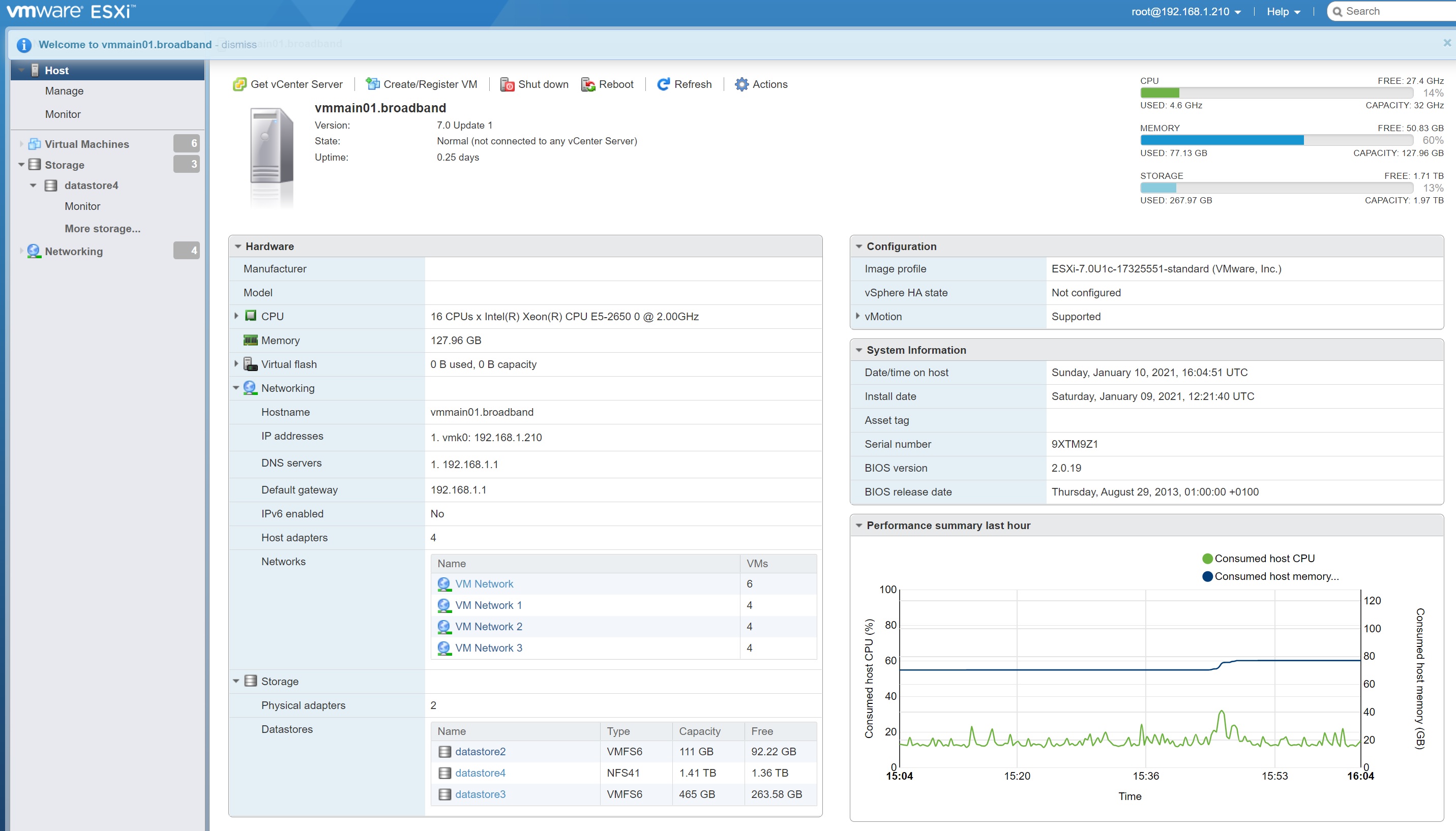

As mentioned above, my main ESXi server (which hosts the nested ESXi servers) is a Dell R620 running the latest ESXi release as of January 2021, 7.0U1c. I have 120GB of RAM and 32 vCPUs to play around with, which is more than enough to test Replication and SRM.

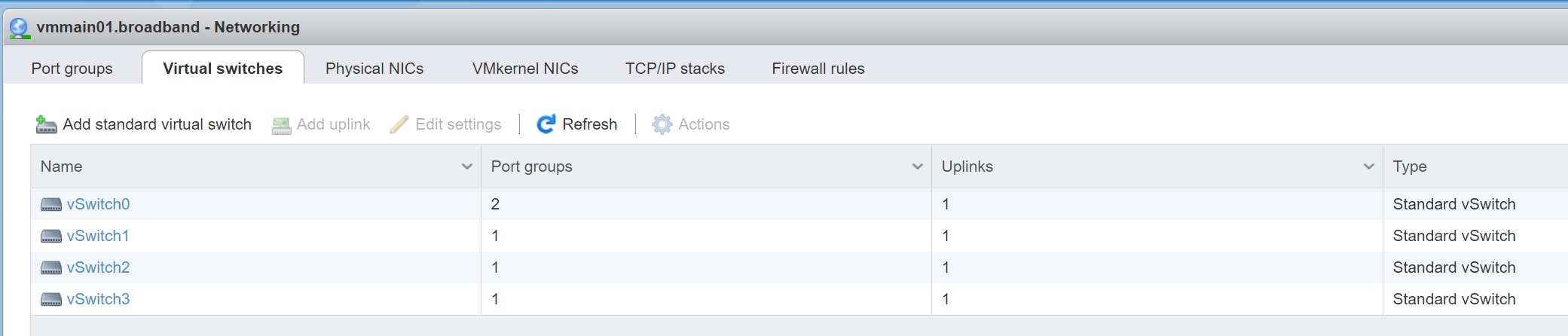

I set up 4 virtual switches, each mapped to one of my 4 physical NICs. When using nested ESXi servers you must make sure the switch security policy has Promiscuous mode set to Accept; this allows network traffic to flow from the nested ESXi servers through the physical server and on to the network.

I then set up 4 port groups, each tied back to a physical NIC by assigning it to one of the switches configured above. This makes it easy to add the networks to the nested ESXi servers when I build them.
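The switch and port group layout can be sketched as a small data model. This is a sketch under assumptions: the names vSwitch0-3, vmnic0-3 and Nested-PG0-3 are hypothetical, and it checks only the promiscuous mode setting mentioned above, flagging any switch left at the ESXi default of Reject.

```python
from dataclasses import dataclass


@dataclass
class VSwitch:
    name: str
    uplink: str                  # physical NIC backing this standard switch
    promiscuous: str = "Reject"  # ESXi's default security policy


# Hypothetical layout: one standard switch per physical NIC, promiscuous = Accept.
SWITCHES = [VSwitch(f"vSwitch{i}", f"vmnic{i}", promiscuous="Accept") for i in range(4)]

# One port group per switch, so each nested-ESXi vNIC traces back to one physical NIC.
PORT_GROUPS = {f"Nested-PG{i}": sw.name for i, sw in enumerate(SWITCHES)}


def blocking_nested_traffic(switches):
    """Names of switches whose promiscuous mode is not Accept."""
    return [sw.name for sw in switches if sw.promiscuous != "Accept"]


def uplink_for(port_group):
    """Follow a port group to its switch, then to the physical NIC."""
    switch_name = PORT_GROUPS[port_group]
    return next(sw.uplink for sw in SWITCHES if sw.name == switch_name)


print(blocking_nested_traffic(SWITCHES))                         # []
print(blocking_nested_traffic([VSwitch("vSwitchX", "vmnic9")]))  # ['vSwitchX']
print(uplink_for("Nested-PG2"))                                  # vmnic2
```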

Finally I created an NFS datastore called datastore4 on the 1.41TB NFS volume; this is where the vCenter servers will be stored. Notice the type is NFS41 (NFS 4.1).
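For reference, the same NFS 4.1 datastore can also be mounted from the ESXi shell with `esxcli storage nfs41 add`. The Python helper below only builds that command string. The Synology address 192.168.1.5 and the share path /volume1/vcenters are hypothetical, so substitute the mount path noted earlier.

```python
import shlex


def nfs41_add_command(servers, share, volume_name):
    """Build an esxcli command that mounts an NFS 4.1 share as a datastore."""
    return " ".join([
        "esxcli", "storage", "nfs41", "add",
        # NFS 4.1 takes a comma-separated list of server addresses.
        "--hosts=" + ",".join(servers),
        # Quote values so paths or names containing spaces survive the shell.
        "--share=" + shlex.quote(share),
        "--volume-name=" + shlex.quote(volume_name),
    ])


print(nfs41_add_command(["192.168.1.5"], "/volume1/vcenters", "datastore4"))
# esxcli storage nfs41 add --hosts=192.168.1.5 --share=/volume1/vcenters --volume-name=datastore4
```

NFS 4.1 can use several server addresses for multipathing, which is why `--hosts` is built from a list here.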

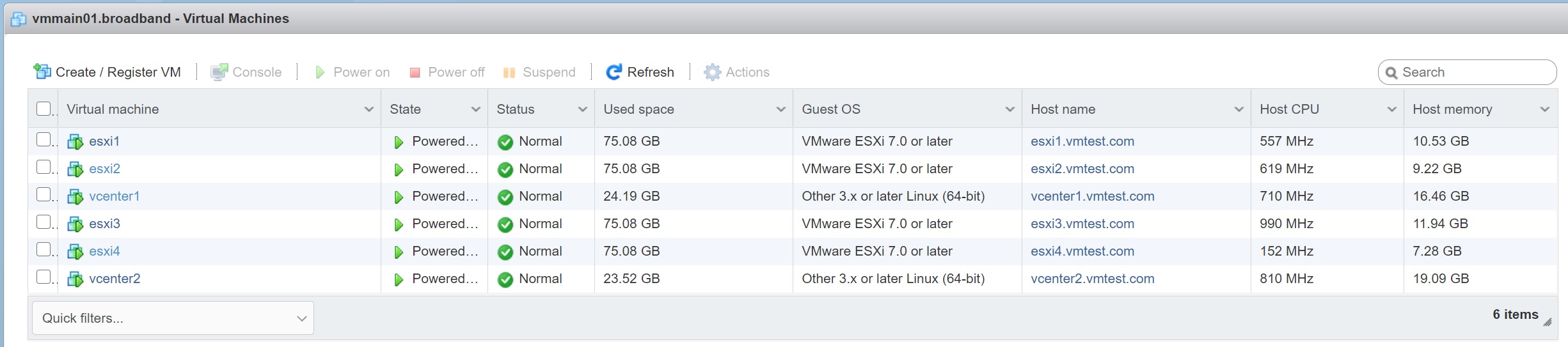

I then created 4 nested ESXi servers and installed two vCenter servers: vcenter1 manages esxi1 and esxi2, and vcenter2 manages esxi3 and esxi4. These represent two data centers (DCs) between which we will perform Replication and SRM operations. I have a separate vCenter section covering how to install and configure vCenter.
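The two-site layout can be captured in a small lookup table. In this sketch vcenter1 is treated as the protected site and vcenter2 as the recovery site; the text does not fix that direction, so treat it as an assumption.

```python
# Site layout from the text: each vCenter manages two nested ESXi hosts.
SITES = {
    "vcenter1": ["esxi1", "esxi2"],
    "vcenter2": ["esxi3", "esxi4"],
}

# Assumption: vcenter1 is the protected site, vcenter2 the recovery site.
PROTECTED, RECOVERY = "vcenter1", "vcenter2"


def managing_vcenter(host):
    """Return the vCenter that manages the given nested ESXi host."""
    for vcenter, hosts in SITES.items():
        if host in hosts:
            return vcenter
    raise KeyError(f"unknown host: {host}")


def peer_site(vcenter):
    """Return the other site, i.e. where this site's VMs would be recovered."""
    if vcenter not in SITES:
        raise KeyError(f"unknown vCenter: {vcenter}")
    # Unpacking fails with ValueError unless there is exactly one other site.
    (other,) = [vc for vc in SITES if vc != vcenter]
    return other


print(managing_vcenter("esxi3"))  # vcenter2
print(peer_site(PROTECTED))       # vcenter2
```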

The nested ESXi server configuration can be seen below. It is a simple VM with 4 vCPUs and 25GB of memory, which is more than enough for this test environment. Notice the 4 network adapters, which represent the 4 physical NICs on the physical server. Again, I used the latest ESXi 7.0U1c.
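A quick way to sanity-check the sizing is to subtract the nested hosts from the physical host's capacity. The figures below come from the text (32 vCPUs and 120GB on the R620, 4 vCPUs and 25GB per nested ESXi server). The two vCenter appliances also run on the physical host, but their sizes are not given here, so they are left out of this sketch.

```python
# Capacity and per-VM sizing taken from the text.
HOST_VCPUS, HOST_MEM_GB = 32, 120
NESTED_ESXI = {f"esxi{i}": {"vcpus": 4, "mem_gb": 25} for i in range(1, 5)}


def unallocated(vms, host_vcpus, host_mem_gb):
    """Return (vCPUs, GB of RAM) not yet allocated to the given VMs."""
    used_vcpus = sum(vm["vcpus"] for vm in vms.values())
    used_mem = sum(vm["mem_gb"] for vm in vms.values())
    return host_vcpus - used_vcpus, host_mem_gb - used_mem


print(unallocated(NESTED_ESXI, HOST_VCPUS, HOST_MEM_GB))  # (16, 20)
```

With these figures, 16 vCPUs and 20GB of RAM remain unallocated on the physical host for the vCenter appliances; if they need more, ESXi will overcommit memory, which may still be workable in a lab.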

The vCenter installation is covered in the separate section mentioned above, but below I show what the installation configured.

Once you connect the nested ESXi servers to vCenter, you will see that their state is now controlled by the vCenter server, and you may or may not see the message in blue.

I created a vMotion distributed switch over which all vMotion traffic travels, and left the management network as per the server's original setup. Notice that no additional networking has been set up for the NFS storage, as I have tried to keep things simple, but you can use other NICs to reduce load and increase performance.

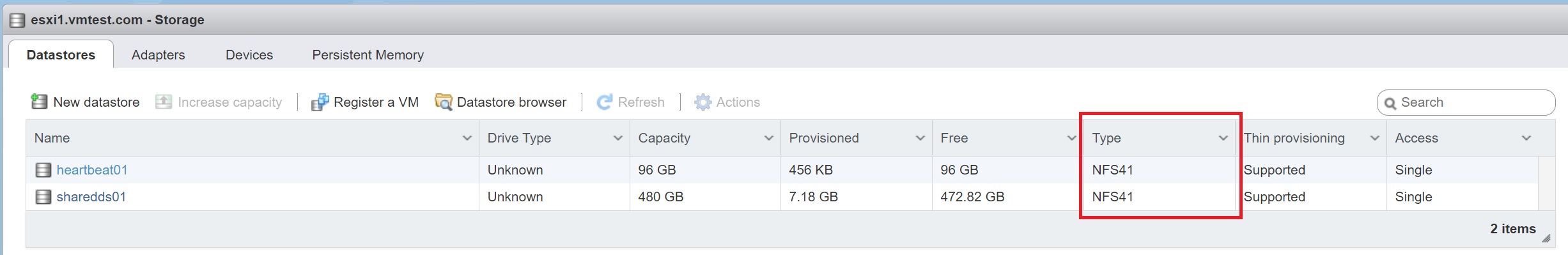

I used vCenter to add the NFS storage. You should do this before setting up HA, as HA requires heartbeat datastores. Again you can see the datastores are NFS41. Besides the heartbeat datastore, the other datastore will be used for the Replication/SRM servers and any test VMs that I need to create to test SRM.

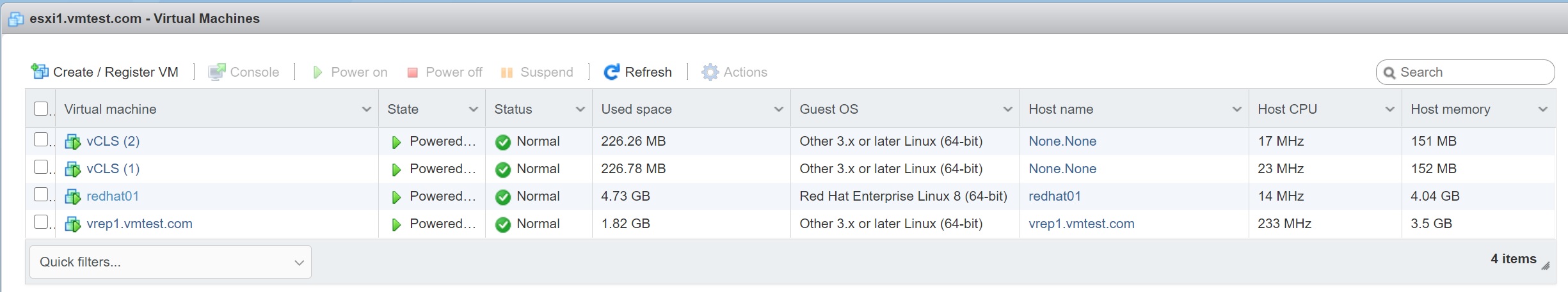

I have also installed a Red Hat 8 VM which will be used to test Replication and SRM; we will see it in the Replication and SRM sections.

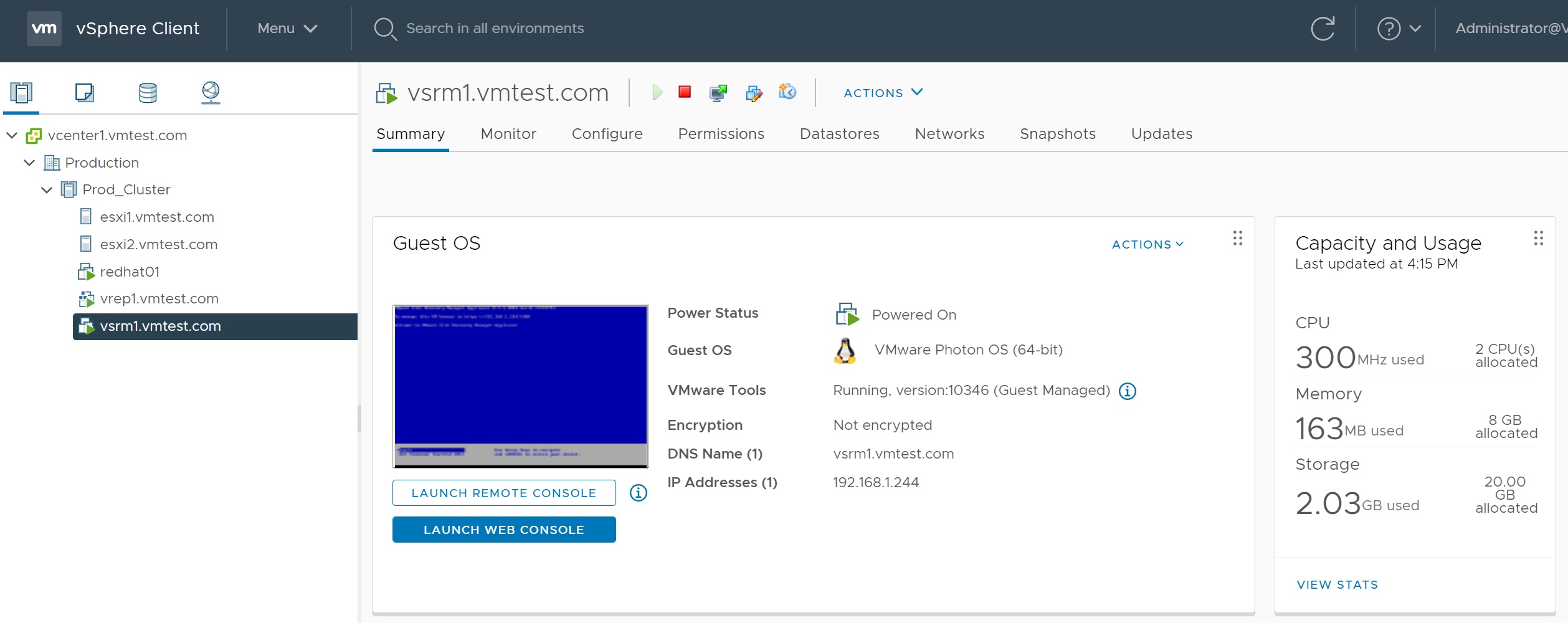

Once everything is finished, the installed Replication and SRM servers can be seen in vCenter, as shown below: the Red Hat 8 VM, the Replication server and the SRM server.

Next, let's take a quick look at the vCenter setup. First, the 2 NFS datastores: one used for the heartbeat and the other for the VMs (Red Hat 8, Replication server, SRM server). Also notice that I created an ISO directory to hold any ISO images I might use to create VMs.

You can also see the vMotion network I created, as mentioned above.

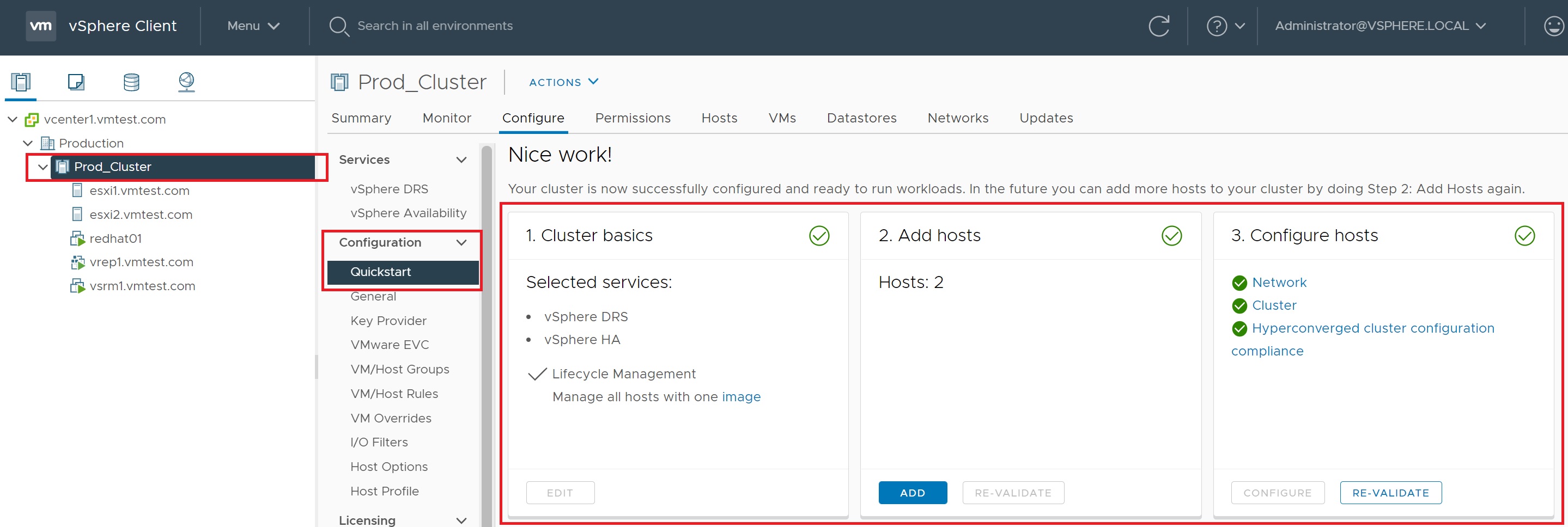

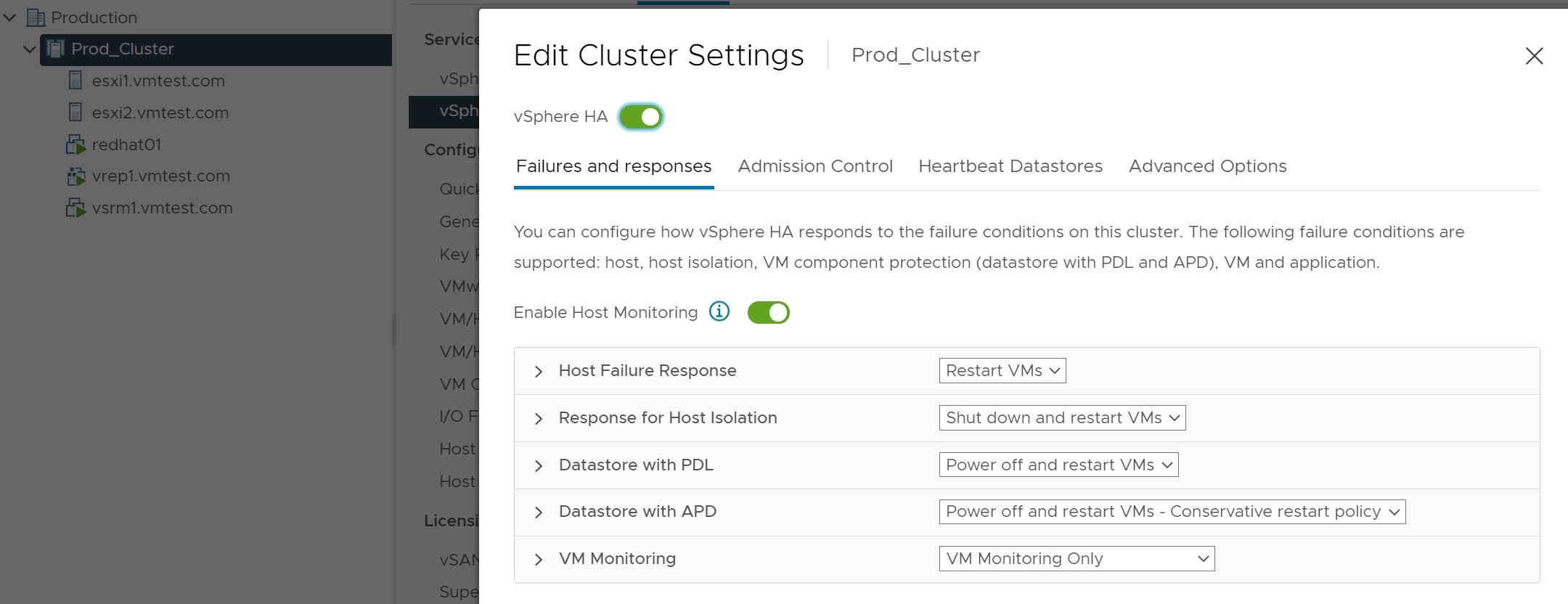

I created a cluster and then added the two nested ESXi servers. The networking configuration step is what created the vMotion network shown in the screenshot above. Again, I kept everything simple and used as many defaults as I could.

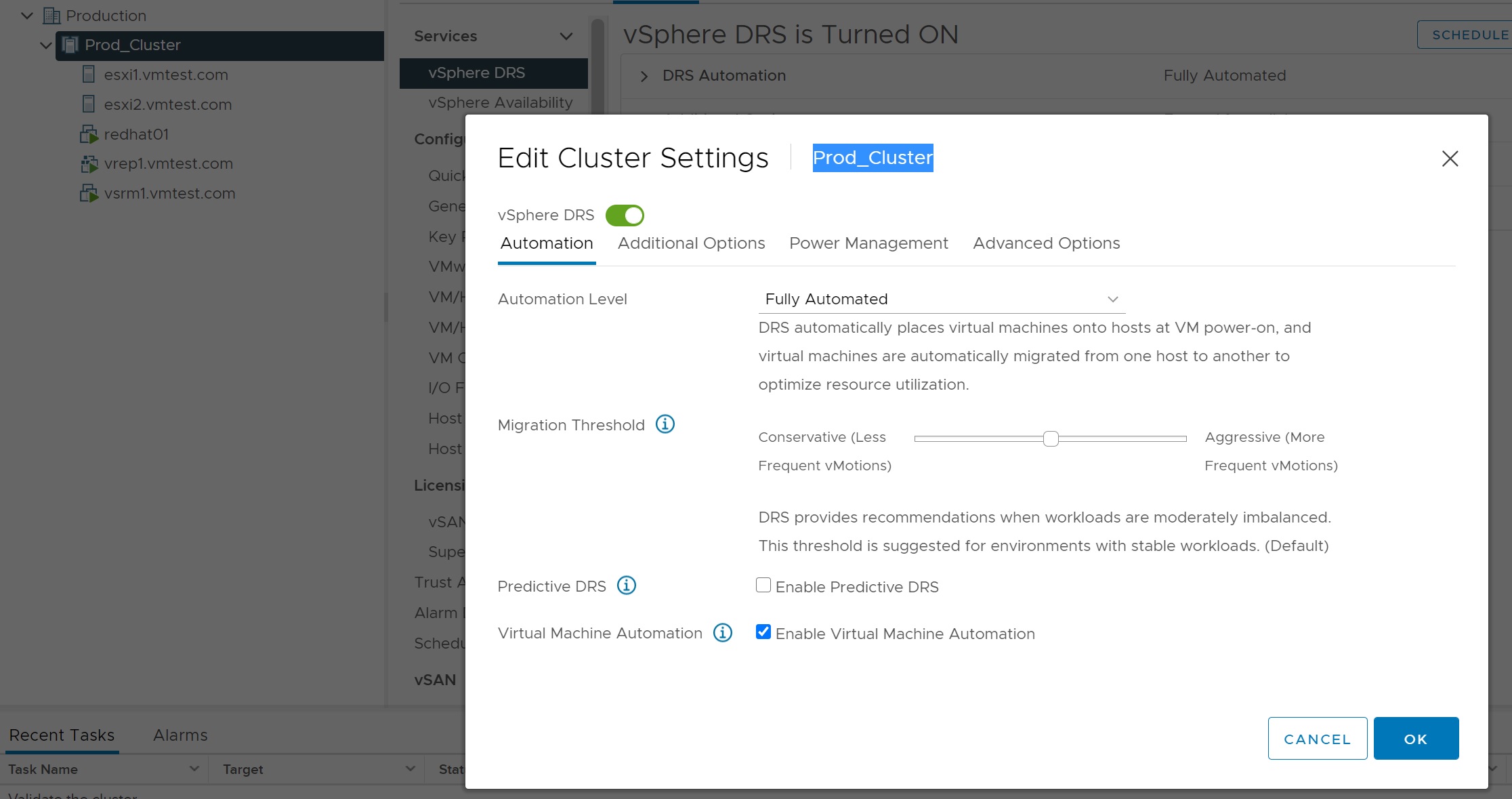

For the DRS section I again used the defaults.

I also changed a few options here to stop the alerts.

I also specified which datastores the cluster can use for HA heartbeats.
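HA heartbeating expects a minimum number of heartbeat datastores per host: the vSphere HA default is two (the das.heartbeatdsperhost advanced option), and with fewer you get a configuration alert. The sketch below counts, per host, how many of the datastores selected for heartbeating that host actually mounts. The datastore and host names are hypothetical, and this is a simplified model rather than HA's actual selection logic.

```python
# vSphere HA's default number of heartbeat datastores per host
# (advanced option das.heartbeatdsperhost, default 2).
REQUIRED_HB_DATASTORES = 2


def heartbeat_shortfall(host_mounts, selected, required=REQUIRED_HB_DATASTORES):
    """For each host, how many more selected heartbeat datastores it needs."""
    selected = set(selected)
    return {
        host: max(0, required - len(selected & set(mounts)))
        for host, mounts in host_mounts.items()
    }


# Hypothetical names: both hosts mount a heartbeat and a VM datastore.
mounts = {"esxi1": ["site1-hb", "site1-vms"], "esxi2": ["site1-hb", "site1-vms"]}
print(heartbeat_shortfall(mounts, ["site1-hb"]))               # {'esxi1': 1, 'esxi2': 1}
print(heartbeat_shortfall(mounts, ["site1-hb", "site1-vms"]))  # {'esxi1': 0, 'esxi2': 0}
```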

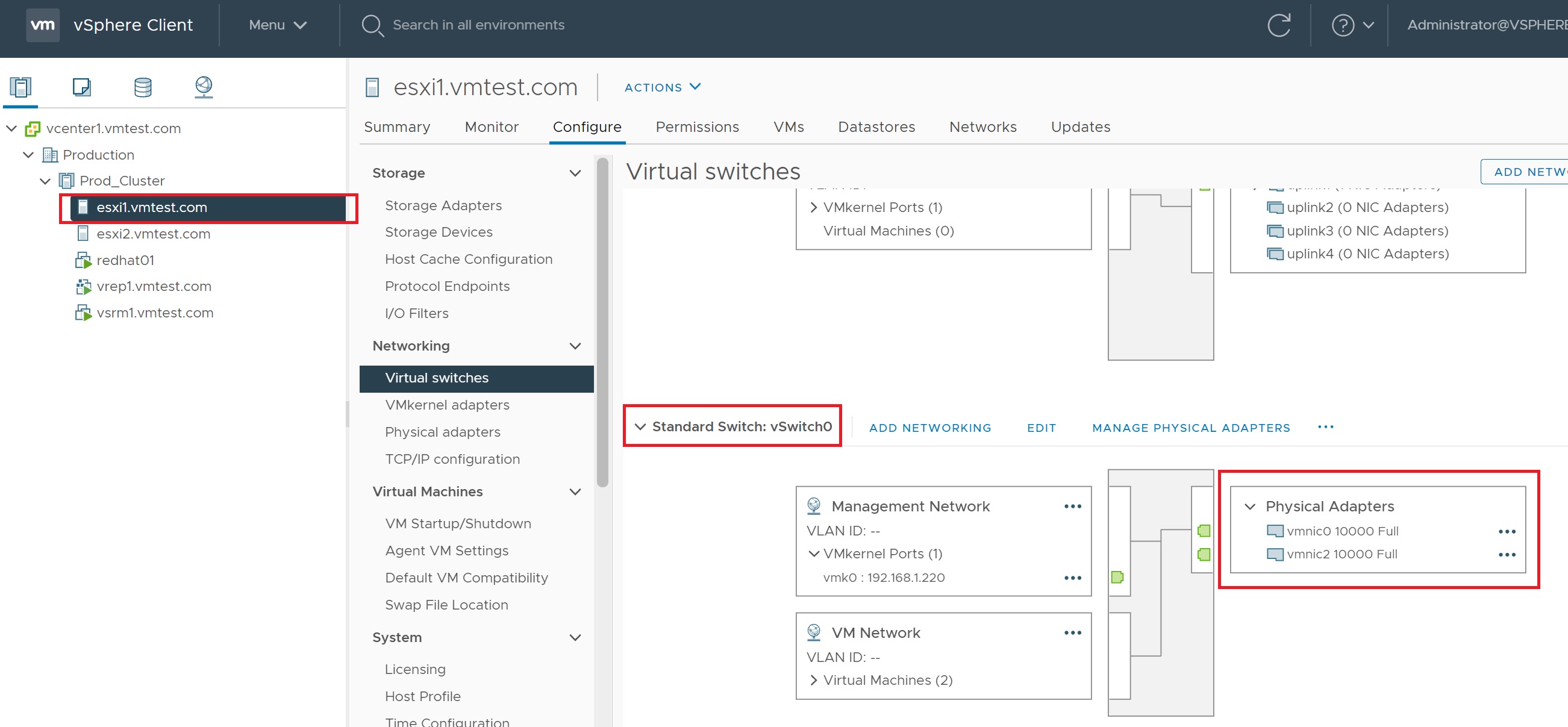

I also had to add an additional NIC to provide redundancy for the management network, as I was getting HA alerts about the lack of management network redundancy.

Once I added the additional NIC, the HA alert went away.
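The redundancy rule behind that alert can be approximated as "the management network must not depend on a single physical NIC". This simplified sketch only counts distinct active and standby uplinks per host; vSphere HA can also accept other forms of redundancy (such as a second management network), which this model ignores. Host and vmnic names are hypothetical.

```python
def hosts_without_mgmt_redundancy(mgmt_uplinks):
    """Hosts whose management network relies on fewer than two physical NICs.

    mgmt_uplinks maps host -> (active uplinks, standby uplinks).
    """
    return sorted(
        host
        for host, (active, standby) in mgmt_uplinks.items()
        if len(set(active) | set(standby)) < 2
    )


# Hypothetical before/after for one host: a standby NIC is added.
before = {"esxi1": (["vmnic0"], [])}
after = {"esxi1": (["vmnic0"], ["vmnic1"])}
print(hosts_without_mgmt_redundancy(before))  # ['esxi1']
print(hosts_without_mgmt_redundancy(after))   # []
```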

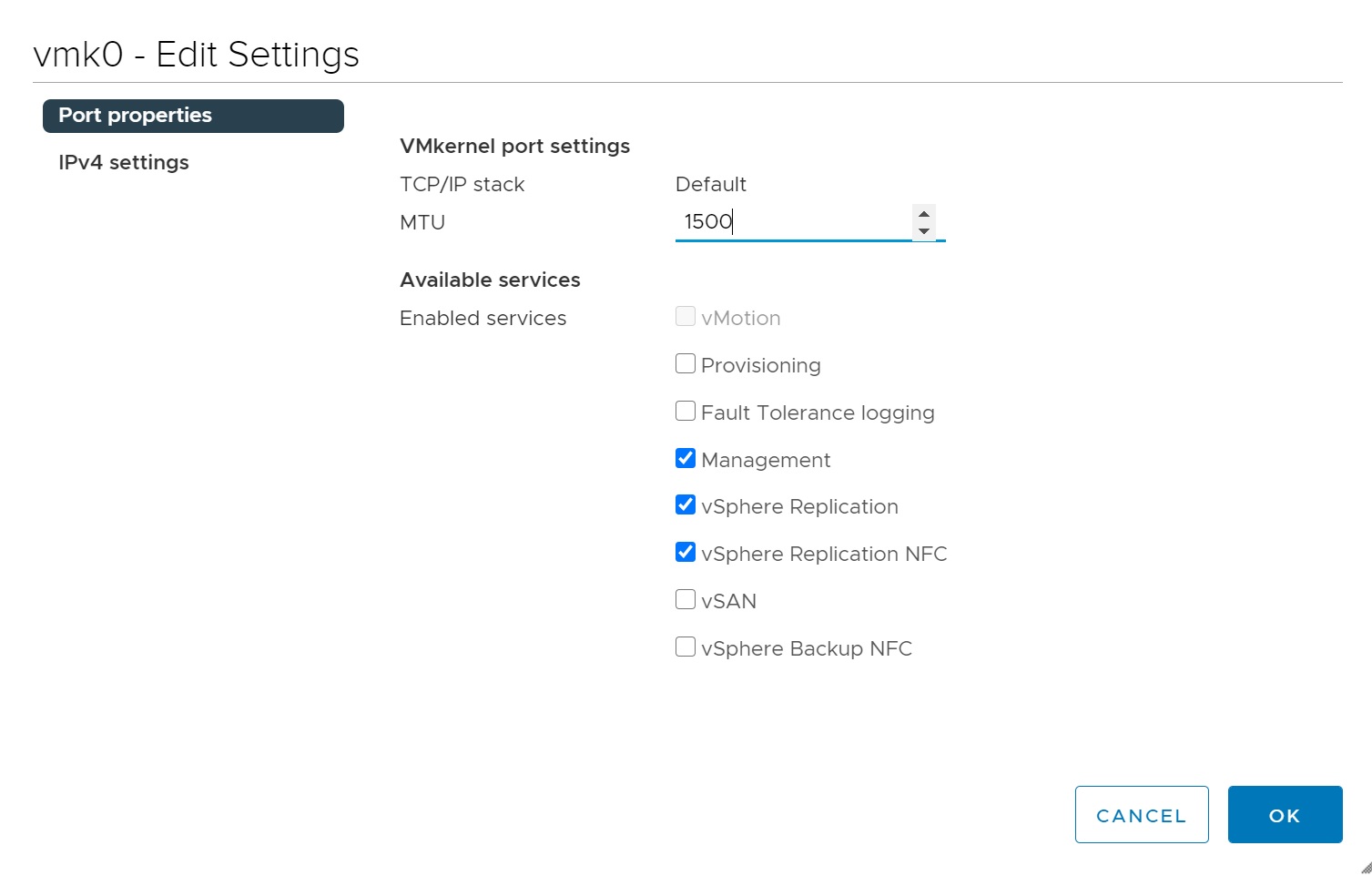

I also let the replication traffic flow over the management network: notice that the vSphere Replication and vSphere Replication NFC services are enabled on it. You could set up a dedicated network for this instead.

The vMotion network has the vMotion service enabled, which vCenter configured for us.
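The resulting VMkernel service layout can be written down as a simple map from adapter to enabled services, which makes it easy to check which adapter carries each traffic type. The adapter names vmk0 and vmk1 are assumptions (vmk0 is typically the management adapter); the service names are the ones shown in vCenter.

```python
# Hypothetical VMkernel adapter names; services as described above.
VMK_SERVICES = {
    "vmk0": {"Management", "vSphere Replication", "vSphere Replication NFC"},
    "vmk1": {"vMotion"},
}


def adapters_for(service):
    """VMkernel adapters with the given service enabled."""
    return sorted(vmk for vmk, services in VMK_SERVICES.items() if service in services)


def missing_services(required):
    """Required services not enabled on any VMkernel adapter."""
    enabled = set().union(*VMK_SERVICES.values())
    return sorted(set(required) - enabled)


print(adapters_for("vSphere Replication NFC"))  # ['vmk0']
print(missing_services({"Management", "vMotion", "vSphere Replication"}))  # []
```

Moving replication onto a dedicated network would amount to moving the two replication services to a new adapter in this map.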